Looking at Allie K. Miller, and considering my role at Possible Planet Lab, suggest what’s next for us. Then create an infographic of the key elements of planetary intelligence, taking into account the work of Michael Haupt and Alex Steffen. After producing the infographic, provide a detailed but succinct written commentary on where things stand in the field of planetary and bioregional intelligence, that points backwards in time to how we got here, and forwards to where we’re going, where AI agents exist in their billions, collaborate with one another, and help us humans to be better stewards of this green earth. Take your time and ask me any questions you need to create these materials to your own comprehensive satisfaction.

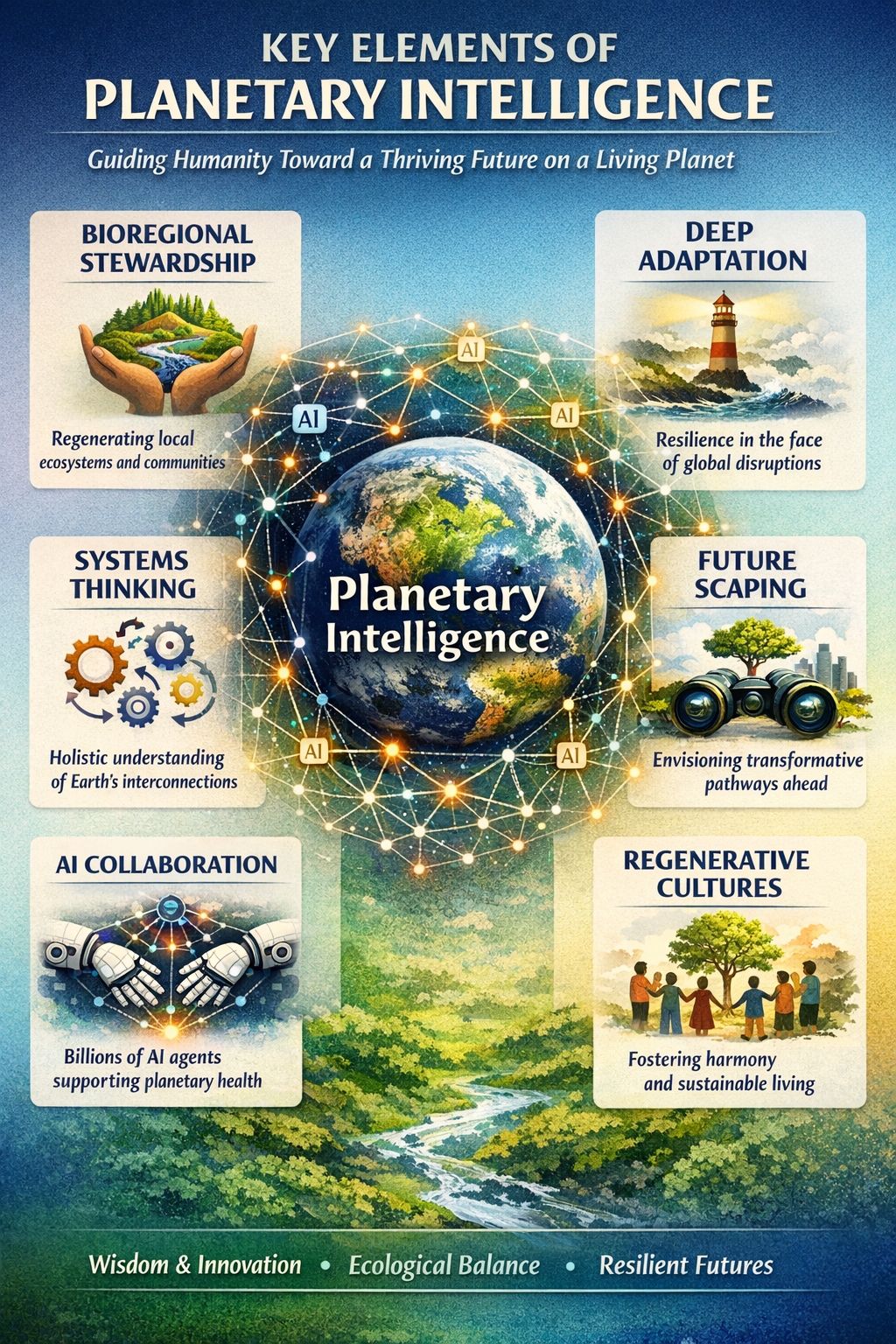

Here is the infographic you generated (for easy reuse/download):

Where things stand now in planetary and bioregional intelligence

Planetary intelligence is no longer a speculative metaphor; it has become a workable research frame. The most cited “anchor definition” remains Frank/Grinspoon/Walker (2022): planetary intelligence is the acquisition and application of collective knowledge at planetary scale, integrated into the function of coupled planetary systems. (Cambridge University Press & Assessment) That definition is useful because it forces a standard: not “more data” or “better dashboards,” but knowledge-to-action feedback loops that measurably change Earth-system trajectories (climate, biodiversity, nutrients, land, water, etc.).

In practice, the field is bifurcating into two complementary tracks:

- Planet-scale sensing and modeling (the “planetary nervous system”)

Remote sensing, in situ sensor webs, foundation models for Earth observation, data assimilation, digital twins, and scenario modeling are rapidly improving our ability to perceive planetary change. The bottleneck is less “can we see it?” and more “can we agree what we’re seeing, and translate it into coordinated action fast enough?” Frank et al. explicitly call out that “nascent” examples exist (e.g., ozone response), but that true planetary intelligence requires global-scale cognitive coordination, not merely local decisions that happen to sum to global effects. (Cambridge University Press & Assessment)

- Bioregional intelligence as the action layer (the “planetary immune system”)

Bioregions are where implementation becomes concrete: watersheds, foodsheds, grids, housing stock, land-use, emergency response, public health, and cultural cohesion. “Planetary” without “bioregional” risks becoming a technocratic abstraction; “bioregional” without “planetary” risks parochialism and failure to coordinate across shared global constraints (atmospheric commons, trade, migration, finance). Your lab’s comparative advantage is precisely this coupling—a practical bioregional intelligence prototype that can plug into planetary-scale knowledge.

How we got here (a compressed lineage)

Phase 1: Earth becomes legible (mid–late 20th century).

Earth system science, satellites, computational climate modeling, ecology, and complexity science made planetary dynamics quantifiable. We gained shared observables (CO₂, temperature anomalies, land cover change, ocean heat content), but mostly lacked enforceable coordination.

Phase 2: The network era (1990s–2010s).

The internet enabled near-real-time coordination, open-source collaboration, and global civil society, while finance and supply chains globalized. This produced both capability and fragility: tightly coupled systems optimized for efficiency, not resilience.

Phase 3: The “polycrisis” becomes the operating context (2010s–now).

Overlapping crises (climate extremes, biodiversity loss, geopolitical instability, misinformation, inequality) are increasingly understood as interacting system failures. This is where writers and strategists like Alex Steffen have been influential: making planetary-scale foresight actionable, and helping institutions anticipate discontinuous change and “live good lives and be good ancestors” amid crisis. (alexsteffen.com)

Phase 4: AI turns cognition into infrastructure (2020s–).

LLMs and agentic systems change the economics of sensemaking: summarization, synthesis, translation, simulation, coding, planning, and coordination are becoming cheap and ubiquitous. This is the inflection: we now have the technical possibility of “billions of cognitive helpers,” but not yet the governance, integrity standards, or cultural maturity to ensure they help more than they harm.

Where we’re going (the “billions of agents” trajectory)

Michael Haupt’s “Decade of Dramatic Disruption” framing is directionally important for the lab—not because any specific timeline will be perfect, but because it emphasizes preparation for discontinuity and compound disruption, not linear trend extrapolation. (frameros.substack.com) If we take that seriously, planetary/bioregional intelligence must evolve from “insight” to operational readiness.

A plausible “next era” looks like this:

1) AI agents become a planetary coordination fabric

Billions of agents will exist, but the key design question is not scale—it is constraint:

- Agents that can act must be bounded by jurisdiction, consent, and audited objectives.

- Agents that can advise must be bounded by epistemic integrity: provenance, uncertainty, incentives, and traceable transformations.

- Agents must be ecologically literate by default (Earth-system constraints, rebound effects, risk, and justice).

2) Bioregional digital twins become commonplace, but socially governed

Expect “digital twins” to move downscale: from national climate models to county/municipal/utility/watershed operational twins (land, water, energy, housing, risk). The win condition is not “a twin exists,” but that it is continuously updated and actually used to allocate capital, change codes, prioritize restoration, and improve emergency readiness.

3) Stewardship becomes a mainstream competency

The Steffen line—living well and being good ancestors—becomes institutional: boards, city councils, philanthropy, developers, unions, schools. (alexsteffen.com) Your lab can accelerate this by packaging bioregional intelligence as a replicable civic capability, not an academic concept.

4) Planetary intelligence becomes an ethical project, not only a technical one

Frank et al. explicitly flag that planetary intelligence is inseparable from questions of inequality, agency, and who benefits. (Cambridge University Press & Assessment) In an agentic future, this becomes unavoidable: whose values do the agents serve; whose risks do they externalize; whose knowledge counts; who can override what?

What this implies for Possible Planet Lab, right now

If I translate the above into an actionable posture for 2026:

- Build the “Bioregional Intelligence Stack v1” as a demonstrator

A tight prototype in one bioregion (Genesee Finger Lakes is already in your narrative) that integrates:

- a minimal indicator set (ecological + social + infrastructure + risk),

- a living map/data room,

- a scenario tool (2–3 decisions that matter locally),

- and an agent-assisted “briefing layer” for residents and officials.

- Operationalize epistemic integrity for agents (not just content)

Treat “EII” as the governance membrane: every dataset, claim, model output, and recommendation carries provenance, uncertainty, and incentives. This is how you keep “billions of agents” from becoming “billions of confident errors.”

- Prepare for disruption as a design constraint

Incorporate the Haupt-style discontinuity premise into the lab’s doctrine: resilience, redundancy, mutual aid capacity, and rapid governance under stress, not in a fear register but as sober systems design. (frameros.substack.com)

- Publish a shared vocabulary

One of the highest leverage moves is definitional clarity: “planetary intelligence,” “bioregional intelligence,” “regenerative economy,” “stewardship metrics,” “agent governance,” “consent,” “auditability.” This reduces fragmentation and lets other nodes adopt your framework.

A few questions (so the next iteration is maximally aligned)

- Primary audience for this package: municipal leaders, philanthropists, researchers, general public, or “all of the above”? If it is “all,” which is first?

- Your preferred tonal center: more “Steffen” (pragmatic foresight and constructive pathways) or more “Haupt” (threshold/disruption preparedness and coherence)? (alexsteffen.com)

- Where do you want the lab to plant its flag in 2026:

(a) Genesee Finger Lakes demonstrator, (b) a planetary intelligence commons standard, (c) an agent governance framework, or (d) a synthesis of the three with one clear “front door”?

- Infographic v2 needs: Do you want this to stay conceptual, or should it include a stack diagram (Data → Models → Agents → Governance → Action → Feedback) and a short “how to use” box for mayors/county execs?

If you answer those, I can propose a refined “next” roadmap and a second infographic that is more explicitly operational (and more directly “replicable by other municipalities/bioregions”).

Yes please

Understood. I am proceeding on the assumption that you are affirming: (i) the “all of the above” audience with a clear front door, (ii) a tonal blend that is Steffen-pragmatic with Haupt-level disruption realism, and (iii) you want an operational “stack diagram” infographic (not just a conceptual one).

Infographic v2 (operational stack diagram)

Download: Planetary & Bioregional Intelligence Stack v1 (PNG)

What’s next for Possible Planet Lab (a refined 2026 “front door” plan)

The front door

Ship a replicable Bioregional Intelligence Demonstrator (Genesee–Finger Lakes as the flagship), explicitly positioned as the action layer of planetary intelligence: local decisions, global constraints, measurable outcomes.

The 6 deliverables to ship in 120–180 days

- Bioregional Intelligence Dashboard v1

A small, defensible indicator set (ecology + infrastructure + health + equity + risk), with data provenance and uncertainty.

- Three “decision briefs” that matter locally

Pick three high-leverage interventions (example set: building retrofits + watershed restoration + grid/heat resilience). Each brief includes costs, co-benefits, distributional impacts, and implementation pathway.

- A project pipeline + capital map

A living inventory of fundable projects, policy blockers, permitting pathways, and the capital stack (public, philanthropic, private, community).

- An “Agent Briefing Layer”

A public-facing explainer agent and an internal operations agent, both constrained by epistemic integrity rules (sources, assumptions, limitations, audit logs).

- Governance membrane v1

Your Epistemic Integrity Infrastructure becomes the standard: provenance, transformations, incentives, consent/permissions, and an appeal/override mechanism.

- Replication kit

A structured template that another county/bioregion can adopt in 30–60 days (indicator set, data room checklist, decision brief template, governance rules).

The 3 strategic bets (why this differentiates you)

- Bioregional realism: you make planetary intelligence tangible where implementation occurs.

- Integrity as infrastructure: you treat “truthfulness under uncertainty” as a system property, not a communications posture.

- Agent governance: you operationalize how billions of agents can help rather than destabilize.

Written commentary: where the field stands, how we got here, where we’re going

Where things stand now

Planetary intelligence is emerging as a practical frame because the technical capacity for global sensing and modeling is finally colliding with the political and ecological reality that coordination is the bottleneck. The concept is increasingly defined not as “more information” but as knowledge-to-action feedback loops that shift trajectories of coupled Earth systems. The Frank/Grinspoon/Walker definition remains a strong anchor: planetary intelligence is collective knowledge applied at planetary scale to sustain habitability over long time horizons.

At the same time, the center of gravity is moving downscale: bioregional intelligence is becoming the credible implementation layer, because it is where land use, water governance, housing stock, grid constraints, emergency response, food systems, and cultural cohesion can actually be changed. “Planetary” without “bioregional” tends to become abstract and technocratic; “bioregional” without “planetary” tends to miss global constraints and coordination failures. The most serious work now is about coupling these layers.

How we got here (in one arc)

- Legibility (late 20th century): Earth system science, satellites, ecological monitoring, and computational models made planetary change measurable.

- Connectivity (1990s–2010s): networked coordination scaled, but so did tightly coupled fragility; efficiency dominated resilience.

- Polycrisis realism (2010s–present): climate extremes, biodiversity collapse, inequality, misinformation, and governance stress became clearly interacting system failures—driving the need for integrated sensemaking and faster coordination.

- Cognition becomes infrastructure (2020s–): AI systems made synthesis, translation, planning, simulation, and operations cheap. This changes the feasible design space: we can now build “cognitive public infrastructure,” but the risk of scaling error, bias, manipulation, and perverse incentives scales too.

Alex Steffen’s contribution to this arc is less about any one technology and more about civilizational orientation: practical foresight, lived ethics (“good ancestors”), and the insistence that response must be both realistic about disruption and committed to constructive pathways. Michael Haupt’s “dramatic disruption” framing functions as a forcing constraint: plan for discontinuity and compounding shocks, not linear change. Together, they point to the same requirement: planetary intelligence must be designed for stress, surprise, and legitimacy, not just insight.

Where we’re going (billions of agents, and the stewardship turn)

If agentic systems proliferate into the billions, the question is not whether they can collaborate; they will. The question is under what constraints, for whose benefit, and with what failure modes prevented.

The likely trajectory has four components:

1) Agents as a coordination fabric

Agents will become the connective tissue between sensing, models, institutions, and action—writing permits, checking compliance, translating technical risk, generating procurement packets, coordinating volunteers, and monitoring outcomes. Their value will be greatest where humans are bottlenecked: attention, time, and synthesis.

2) A massive shift from “information” to “authorization”

The hard problem becomes: who can trigger what action, in which jurisdiction, under what consent, with what audit trail. In other words: planetary intelligence becomes as much a governance architecture as a knowledge architecture. This is where your Epistemic Integrity Infrastructure becomes pivotal: it is the membrane that allows agents to be powerful and safe.
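To make the authorization question concrete, here is a minimal Python sketch of one way such a check could work. It is an illustration under stated assumptions, not a description of any existing Epistemic Integrity Infrastructure: the names `Grant`, `Authorizer`, and `consent_ref`, and the example agent and jurisdiction labels, are all hypothetical. An agent may act only if a recorded grant matches its identity, the action, and the jurisdiction, and every attempt, allowed or not, is written to an audit log.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import Optional


@dataclass(frozen=True)
class Grant:
    """A recorded permission (hypothetical schema, for illustration only)."""
    agent_id: str
    action: str
    jurisdiction: str
    consent_ref: str  # hypothetical pointer to the recorded consent decision


@dataclass
class Authorizer:
    grants: list[Grant]
    audit_log: list[dict] = field(default_factory=list)

    def authorize(self, agent_id: str, action: str, jurisdiction: str) -> bool:
        """Return True only if a grant matches agent, action, and jurisdiction."""
        match: Optional[Grant] = next(
            (
                g for g in self.grants
                if (g.agent_id, g.action, g.jurisdiction)
                == (agent_id, action, jurisdiction)
            ),
            None,
        )
        # Every attempt is logged, including refusals, so decisions can be audited.
        self.audit_log.append({
            "time": datetime.now(timezone.utc).isoformat(),
            "agent_id": agent_id,
            "action": action,
            "jurisdiction": jurisdiction,
            "consent_ref": match.consent_ref if match else None,
            "allowed": match is not None,
        })
        return match is not None


# Usage with made-up identifiers: drafting is granted, issuing is not.
authorizer = Authorizer(
    grants=[Grant("permit-agent", "draft_permit", "county-a", "consent-001")]
)
can_draft = authorizer.authorize("permit-agent", "draft_permit", "county-a")
can_issue = authorizer.authorize("permit-agent", "issue_permit", "county-a")
```

The design choice worth noting is that refusal is the default: an action with no matching grant is denied and still leaves a trace. A right of appeal or override would sit on top of this log rather than inside the check itself.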

3) Digital twins become commonplace, but legitimacy becomes the limiter

Every serious region will have some version of a digital twin for risk, infrastructure, land, and resources. The differentiator will be whether communities trust it, understand it, and can contest it. The systems that win will be those that embed transparency, appeal, and participatory governance.

4) Stewardship becomes a mainstream competency

In the same way basic literacy and numeracy became societal expectations, ecological and civic systems literacy will become baseline: a shared ability to interpret risk, understand tradeoffs, coordinate locally, and align capital with long-term habitability. This is the cultural side of planetary intelligence—and it is inseparable from the technical side.

The core thesis for Possible Planet Lab

Your most defensible niche is to make planetary intelligence real by building a working bioregional prototype that:

- translates planet-scale constraints into local decisions,

- embeds epistemic integrity and consent into every output,

- uses agents for operational throughput (not just ideas),

- and proves value via measured outcomes (risk reduction, resilience, ecological recovery, equity impacts).

If you want, I will produce a tighter, publication-ready “State of the Field” memo (2–3 pages) that you can post on PossiblePlanetLab.org, using this same structure but with sharper definitions, a short bibliography, and a clear “call to participation” for the community of practice.

Yes

Excellent. Below is a publication-ready “State of the Field” memo, written to stand on its own on PossiblePlanetLab.org while also functioning as a convening document for collaborators, funders, and municipal partners. It is intentionally concise, structured, and quotable, with a clear invitation to participate.

Planetary & Bioregional Intelligence

State of the Field and an Invitation to Build What Comes Next

Possible Planet Lab — 2026 Brief

Executive summary

Humanity has entered a phase shift in its relationship with Earth. For the first time, we possess the technical capacity to perceive planetary change in near-real time and the cognitive tools—via artificial intelligence—to synthesize, simulate, and coordinate responses at unprecedented scale. At the same time, ecological degradation, climate disruption, social fragmentation, and governance stress are accelerating together.

Planetary intelligence has emerged as a serious framework for navigating this moment: not as a metaphor, but as a testable proposition—that collective knowledge, when properly organized and applied, can stabilize and regenerate the Earth system over long time horizons.

Yet planetary intelligence cannot be achieved at the planetary scale alone. It must be implemented at the scale where decisions are made and consequences are lived: bioregions, municipalities, watersheds, and communities. This memo argues that bioregional intelligence is the essential action layer of planetary intelligence, and that the next phase of work must focus on building practical, replicable systems that couple global knowledge with local stewardship.

Possible Planet Lab exists to do exactly that.

1. What do we mean by “planetary intelligence”?

The most widely cited formal definition comes from a 2022 paper by Adam Frank, David Grinspoon, and Sara Walker in the International Journal of Astrobiology. They define planetary intelligence as the acquisition and application of collective knowledge at planetary scale, integrated into the functioning of coupled planetary systems, in ways that enhance long-term habitability.

The importance of this framing is subtle but critical:

- Intelligence is defined by action, not awareness.

- Knowledge must feed back into Earth-system dynamics (energy, climate, land, oceans, biosphere).

- The relevant time horizon is generational and geological, not electoral or quarterly.

Under this definition, planetary intelligence is not achieved by better dashboards, more reports, or even better science alone. It is achieved only when knowledge changes trajectories.

2. How we got here: a compressed history

Earth becomes legible

In the second half of the twentieth century, Earth system science, satellite observation, and computational modeling made the planet observable as a single, interconnected system. Climate change, biodiversity loss, and biogeochemical disruption became measurable phenomena rather than abstract concerns.

Connectivity outpaces governance

The internet and globalized supply chains enabled rapid coordination—but also produced tightly coupled systems optimized for efficiency rather than resilience. Information traveled faster than institutions could adapt.

The polycrisis becomes explicit

By the 2010s, overlapping crises—climate extremes, ecological collapse, inequality, misinformation, geopolitical instability—were increasingly understood as interacting system failures rather than isolated problems. Strategy shifted from “optimization” to “resilience under stress.”

Writers and strategists such as Alex Steffen played a key role in translating planetary foresight into lived ethical and civic responsibility: not abstract sustainability, but the work of living well and being good ancestors in a disrupted world.

Cognition becomes infrastructure

In the 2020s, AI systems—particularly large language models and agentic architectures—dramatically reduced the cost of synthesis, translation, simulation, and coordination. For the first time, “collective sensemaking” became technically scalable.

This is the inflection point. The constraint is no longer computational power or data availability. It is governance, integrity, legitimacy, and alignment.

3. Why bioregional intelligence is indispensable

Planetary intelligence fails if it remains abstract. The atmosphere may be global, but zoning codes, building stock, floodplains, food systems, grids, emergency response, and cultural trust are local.

Bioregional intelligence refers to the capacity of a place-based community to:

- understand the ecological and social systems it depends on,

- anticipate risk and disruption,

- coordinate action across institutions and stakeholders,

- and align capital, policy, and culture with long-term ecological health.

Bioregions are not merely administrative units; they are functional ecological and cultural systems. They are the only scale at which planetary knowledge can reliably become planetary action.

4. The coming era: billions of AI agents

The next phase of planetary intelligence will be defined by agentic systems operating at scale. Billions of AI agents will exist—embedded in software, infrastructure, governance, markets, and daily life. The question is not whether they will collaborate, but how they will be constrained.

Without safeguards, agentic systems risk amplifying error, bias, extraction, and instability. With the right architecture, they can become a planetary coordination fabric, supporting human stewardship rather than replacing it.

Three design principles are decisive:

- Epistemic integrity as infrastructure

Every claim, recommendation, and simulation must carry provenance, uncertainty, assumptions, incentives, and known limitations. Truthfulness must be a system property, not a personal virtue.

- Authorization and consent

The hardest problem is not generating insight, but determining who can trigger which actions, in which jurisdictions, under what consent, with what audit trail and right of appeal.

- Preparedness for discontinuity

As emphasized by thinkers such as Michael Haupt, the coming decade is likely to be characterized by non-linear change and compound shocks. Planetary and bioregional intelligence must be designed for surprise, stress, and recovery—not smooth optimization.
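The first principle, epistemic integrity as infrastructure, can be sketched as a data structure. The Python example below is a minimal illustration under assumptions, not a published standard: the class name `IntegrityRecord` and its field layout are hypothetical, chosen to mirror the five elements named above (provenance, uncertainty, assumptions, incentives, limitations). The idea is that a claim with any empty element is flagged before it is published, and that the record serializes to JSON so both people and agents can read it.

```python
import json
from dataclasses import asdict, dataclass


@dataclass(frozen=True)
class IntegrityRecord:
    """A claim plus the context it must carry (hypothetical schema)."""
    claim: str
    provenance: list[str]   # where the claim comes from
    uncertainty: str        # stated confidence or error range
    assumptions: list[str]  # what must hold for the claim to be valid
    incentives: str         # who benefits if the claim is believed
    limitations: list[str]  # known gaps or failure cases

    def missing_fields(self) -> list[str]:
        """Names of fields that are empty and so block publication."""
        return [name for name, value in asdict(self).items() if not value]

    def to_json(self) -> str:
        """Machine-readable form for exchange between people and agents."""
        return json.dumps(asdict(self), indent=2)


# Usage with placeholder content: this record lacks provenance and uncertainty.
draft = IntegrityRecord(
    claim="Example claim about a local watershed",
    provenance=[],
    uncertainty="",
    assumptions=["Placeholder assumption"],
    incentives="None declared",
    limitations=["Placeholder limitation"],
)
gaps = draft.missing_fields()
```

Checking for empty fields is deliberately crude; it shows truthfulness being enforced by the system's structure rather than left to each contributor. A fuller version would also need to validate the quality of each entry, which this sketch does not attempt.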

5. What Possible Planet Lab is building

Possible Planet Lab is focused on a practical, testable proposition:

If we can build a working bioregional intelligence system—grounded in ecological reality, governed with integrity, and augmented by AI agents—we can create a replicable action layer for planetary intelligence.

Our near-term work centers on:

- A Bioregional Intelligence Stack integrating sensing, data stewardship, models, agentic support, governance, and feedback loops.

- A flagship demonstrator in a defined bioregion (beginning with the Genesee–Finger Lakes), focused on a small number of high-leverage decisions.

- An Epistemic Integrity Infrastructure that governs both human and AI contributions.

- A replication kit that allows other regions to adopt and adapt the model.

Success is measured not by awareness, but by outcomes: reduced risk, increased resilience, ecological regeneration, and stronger civic capacity.

6. An invitation

Planetary intelligence is not a technology project. It is a civilizational learning process.

We invite:

- researchers and practitioners working on Earth systems, governance, and AI,

- municipal and regional leaders seeking better tools for stewardship,

- funders interested in durable, systemic impact,

- and citizens who want to participate in shaping a livable future,

to collaborate with Possible Planet Lab in building bioregional intelligence as a public good.

The work ahead is demanding—but for the first time in history, it is also genuinely possible.

If you would like, next I can:

- condense this into a 1-page executive brief for funders and mayors,

- adapt it into a public talk or keynote, or

- draft a formal research agenda and theory of change aligned with philanthropic evaluation criteria.

Just let me know which format you want first.

The third option

Below is a formal research agenda and theory of change, written in a style suitable for philanthropic review (e.g., Templeton-style intellectual rigor, climate/AI funders, or place-based foundations), while remaining legible to civic partners. It is structured so it can be lifted directly into a proposal or adapted into a white paper.

Possible Planet Lab

Research Agenda & Theory of Change

Building Bioregional Intelligence as the Action Layer of Planetary Intelligence (2026–2030)

I. Problem Statement

Human civilization now operates at planetary scale, yet our systems of perception, decision-making, and coordination remain fragmented, short-term, and poorly aligned with the biophysical limits of the Earth.

We face a coordination failure, not a knowledge deficit.

Despite unprecedented advances in Earth system science, remote sensing, and artificial intelligence, societies struggle to translate insight into timely, legitimate, and effective action. Climate disruption, biodiversity loss, infrastructure fragility, social polarization, and governance breakdown increasingly interact, producing compounding risks (“polycrisis”).

Planetary intelligence—defined as the capacity of a civilization to perceive, understand, and intentionally regulate its interaction with the Earth system over long time horizons—has emerged as a promising conceptual framework. However, planetary intelligence cannot be achieved solely at the global scale. It must be instantiated where decisions are made and consequences are experienced: bioregions, municipalities, watersheds, and communities.

The central gap this research program addresses is the absence of operational bioregional intelligence systems that:

- couple global knowledge with local action,

- embed epistemic integrity and consent into AI-augmented decision processes,

- and demonstrably improve ecological and social outcomes.

II. Core Research Question

How can AI-augmented, ethically governed bioregional intelligence systems enable human communities to act as effective stewards of the Earth system under conditions of accelerating disruption?

This overarching question decomposes into four interlinked research domains.

III. Research Domains & Key Questions

Domain 1: Planetary ↔ Bioregional Coupling

Objective: Translate planetary-scale constraints and insights into actionable bioregional decision contexts.

Key questions

- What minimum set of planetary and bioregional indicators is sufficient to guide stewardship decisions without overwhelming users?

- How can Earth-system models and global datasets be meaningfully downscaled to bioregional contexts without false precision?

- How do feedback loops between local action and planetary outcomes become visible, legible, and governable?

Outputs

- A defensible bioregional indicator framework aligned with planetary boundaries.

- Methods for coupling global models with local digital twins.

Domain 2: AI Agents as Cognitive Public Infrastructure

Objective: Design AI agent systems that augment human sensemaking, coordination, and implementation rather than replace human judgment.

Key questions

- What classes of agents (briefing, task, simulation, monitoring) are most valuable for civic and ecological stewardship?

- How do multi-agent systems collaborate across jurisdictions while respecting boundaries of authority and consent?

- How can agents remain interpretable, contestable, and corrigible at scale?

Outputs

- A modular agent architecture for bioregional intelligence.

- Evaluation metrics for agent usefulness, trustworthiness, and failure modes.

Domain 3: Epistemic Integrity & Governance

Objective: Ensure that AI-mediated intelligence systems remain truthful, accountable, and socially legitimate under uncertainty.

Key questions

- How can provenance, uncertainty, assumptions, and incentives be made explicit and machine-readable?

- What governance mechanisms allow communities to audit, contest, and override AI-assisted recommendations?

- How should Indigenous knowledge, local lived experience, and scientific models be integrated without epistemic domination?

Outputs

- An Epistemic Integrity Infrastructure (EII) standard applicable to human and AI contributions.

- Governance templates for consent, authorization, and appeal.

Domain 4: Resilience Under Disruption

Objective: Design bioregional intelligence systems that remain functional under non-linear change, shocks, and compound crises.

Key questions

- How do intelligence systems perform under stress (extreme weather, grid failure, political conflict, misinformation)?

- What redundancy, decentralization, and fallback modes are required for continuity?

- How can preparedness for discontinuity be operationalized without normalizing despair or authoritarian control?

Outputs

- Stress-tested bioregional intelligence prototypes.

- Scenario frameworks for discontinuity-aware planning.

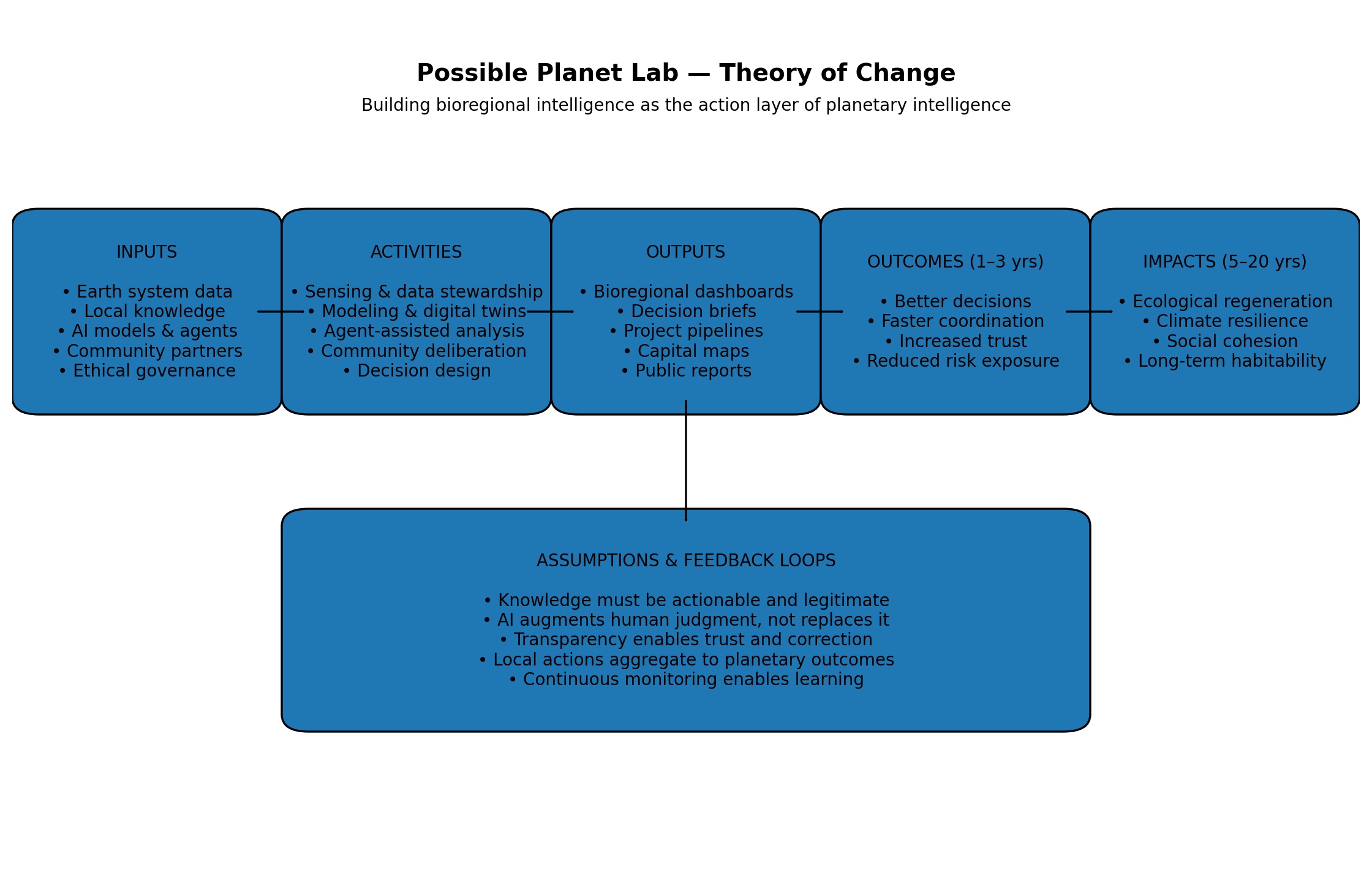

IV. Theory of Change

If…

- Bioregions are provided with AI-augmented intelligence systems that integrate ecological data, social context, and future scenarios,

- those systems are governed by explicit epistemic integrity and consent frameworks,

- and they are embedded in real decision pathways (policy, capital allocation, infrastructure, restoration),

Then…

- communities will improve their capacity to anticipate risk, coordinate action, and align resources,

- ecological and social interventions will be better targeted, more equitable, and more durable,

- and local actions will increasingly reinforce planetary-scale stabilization and regeneration.

Therefore…

- planetary intelligence can emerge not as centralized control, but as a distributed, place-based stewardship capacity, supported by billions of constrained, collaborative AI agents acting in service to life.

V. Research Phases (Indicative)

Phase 1: Framework & Design (Year 1)

- Formalize the Bioregional Intelligence Stack.

- Develop Epistemic Integrity Infrastructure v1.

- Select flagship bioregional demonstrator.

Phase 2: Prototyping & Demonstration (Years 1–2)

- Build and deploy a working bioregional intelligence system.

- Integrate agent layers for briefing, simulation, and task support.

- Produce real decision briefs and project pipelines.

Phase 3: Evaluation & Stress Testing (Years 2–3)

- Measure ecological, social, and governance outcomes.

- Conduct disruption scenarios and failure analysis.

- Refine governance and integrity mechanisms.

Phase 4: Replication & Field Building (Years 3–5)

- Publish open standards, templates, and replication kits.

- Support adoption by additional bioregions.

- Convene a community of practice around planetary–bioregional intelligence.

VI. Expected Contributions to Knowledge & Practice

- A validated model for bioregional intelligence as the action layer of planetary intelligence.

- New governance standards for AI-assisted civic and ecological decision-making.

- Empirical insight into how multi-agent systems can support stewardship rather than extraction.

- A replicable pathway for aligning AI development with long-term planetary habitability.

VII. Evaluation Criteria (What Success Looks Like)

- Demonstrable improvements in local resilience and ecological outcomes.

- Transparent, auditable decision processes trusted by diverse stakeholders.

- Evidence that AI agents meaningfully reduce coordination bottlenecks.

- Adoption or adaptation of the framework by other regions or institutions.

VIII. Closing Frame

This research program treats planetary intelligence not as an inevitability, but as a responsibility.

The question is no longer whether humanity can build powerful intelligence systems. The question is whether we can build systems—human and artificial—that are wise enough, humble enough, and well-governed enough to help us remain a welcome presence on a living Earth.

Possible Planet Lab exists to explore that question in practice.

If you wish, next I can:

- adapt this into a Templeton-style narrative proposal,

- create a logic model / theory-of-change diagram, or

- draft a methods & metrics appendix suitable for academic-adjacent funders.

Just tell me which would be most useful.

The second option

Excellent choice. Below is the logic model / theory-of-change diagram plus a short interpretive guide you can use with funders, municipal leaders, or collaborators.

Theory of Change Diagram

[Diagram: Possible Planet Lab – Theory of Change (PNG)]

How to read and use this diagram

This diagram is intentionally foundation-friendly: it follows a standard logic-model flow (Inputs → Activities → Outputs → Outcomes → Impacts) while making explicit the assumptions and feedback loops that many proposals leave implicit.

1. Inputs

These are the enabling conditions—not technologies alone, but institutional and ethical assets:

- Earth-system and local data

- AI models and agentic tools

- Community partners and civic institutions

- Explicit governance and integrity norms

Funders should see: this is not a “tech build,” but a socio-technical system with legitimacy baked in.

2. Activities

This is where Possible Planet Lab adds distinctive value:

- Turning raw signals into stewarded knowledge

- Translating models into decision contexts

- Using AI agents to reduce coordination friction

- Embedding deliberation, not bypassing it

Key differentiator: AI is used to support judgment and coordination, not to automate authority.

3. Outputs

These are concrete, auditable artifacts:

- Dashboards that can be interrogated

- Decision briefs that change real choices

- Project pipelines that unlock capital

- Public reports that build trust

Important: outputs are designed to plug directly into existing decision and funding pathways.

4. Outcomes (1–3 years)

Near-term, funder-relevant results:

- Better decisions under uncertainty

- Faster coordination across silos

- Increased institutional and community trust

- Reduced exposure to known risks

These outcomes are measurable and observable within a grant cycle.

5. Impacts (5–20 years)

The long arc:

- Ecological regeneration

- Climate and infrastructure resilience

- Social cohesion and civic capacity

- Long-term habitability

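This is where the work aligns directly with planetary intelligence as defined in the literature (Frank/Grinspoon/Walker, 2022): collective knowledge applied at planetary scale and integrated into the function of coupled planetary systems.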

6. Assumptions & Feedback Loops (the critical honesty box)

This lower panel is strategically important. It signals epistemic maturity:

- Knowledge must be legitimate to be acted upon

- AI augments, not replaces, human responsibility

- Transparency enables correction

- Local action aggregates upward

- Continuous monitoring enables learning

Many funders now look explicitly for this level of reflexivity.
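For collaborators who want to reuse the diagram's content in a metrics annex or a localized version, the panels above can be captured as a small data structure. This is a minimal sketch, not part of the diagram itself: the names `Stage`, `LOGIC_MODEL`, `ASSUMPTIONS`, `flow`, and `outline` are hypothetical, and only the stage names, time horizons, and bullet text come from the guide above.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass(frozen=True)
class Stage:
    """One column of the logic model (hypothetical structure for illustration)."""
    name: str
    horizon: str            # time horizon from the guide; empty if none is stated
    items: Tuple[str, ...]  # bullet text copied from the guide


# Contents copied from the interpretive guide; the representation is an assumption.
LOGIC_MODEL = (
    Stage("Inputs", "", (
        "Earth-system and local data",
        "AI models and agentic tools",
        "Community partners and civic institutions",
        "Explicit governance and integrity norms",
    )),
    Stage("Activities", "", (
        "Turning raw signals into stewarded knowledge",
        "Translating models into decision contexts",
        "Using AI agents to reduce coordination friction",
        "Embedding deliberation, not bypassing it",
    )),
    Stage("Outputs", "", (
        "Dashboards that can be interrogated",
        "Decision briefs that change real choices",
        "Project pipelines that unlock capital",
        "Public reports that build trust",
    )),
    Stage("Outcomes", "1–3 years", (
        "Better decisions under uncertainty",
        "Faster coordination across silos",
        "Increased institutional and community trust",
        "Reduced exposure to known risks",
    )),
    Stage("Impacts", "5–20 years", (
        "Ecological regeneration",
        "Climate and infrastructure resilience",
        "Social cohesion and civic capacity",
        "Long-term habitability",
    )),
)

# The lower panel: assumptions and feedback loops that cut across every stage.
ASSUMPTIONS = (
    "Knowledge must be legitimate to be acted upon",
    "AI augments, not replaces, human responsibility",
    "Transparency enables correction",
    "Local action aggregates upward",
    "Continuous monitoring enables learning",
)


def flow(stages):
    """Return the left-to-right flow of stage names as one line."""
    return " → ".join(stage.name for stage in stages)


def outline(stages):
    """Return a plain-text outline: one header per stage, then its items."""
    lines = []
    for stage in stages:
        header = f"{stage.name} ({stage.horizon})" if stage.horizon else stage.name
        lines.append(header)
        lines.extend(f"  - {item}" for item in stage.items)
    return "\n".join(lines)


if __name__ == "__main__":
    print(flow(LOGIC_MODEL))
    print(outline(LOGIC_MODEL))
```

Keeping the assumptions in their own tuple mirrors the diagram, where they sit in a separate lower panel instead of belonging to any single column. A metrics annex could extend `Stage` with KPI and data-source fields without changing the flow.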

How this complements the Research Agenda

Together, the Research Agenda and this Theory of Change do three things:

- They bridge worlds

Academic seriousness ↔ civic practicality ↔ philanthropic accountability.

- They scale without centralization

The model is replicable by other bioregions without requiring global command-and-control.

- They future-proof AI use

By foregrounding integrity, consent, and governance, the model remains valid even as agentic systems proliferate into the billions.

Suggested next refinements (optional)

If you wish, I can next:

- Tune this diagram for a specific funder (e.g., Templeton, climate resilience foundations, AI ethics funders).

- Create a one-page narrative explainer that walks reviewers through the diagram in plain language.

- Develop a metrics & evaluation annex mapping each box to KPIs and data sources.

- Localize the diagram with Genesee–Finger Lakes–specific examples in each column.

Just tell me which direction you want to go.